Trends in Information Technology

Information technology is advancing rapidly, and its applications are multiplying. It has become a vital part of daily life. The Information Technology Association of America (ITAA) defined information technology as “the study, design, development, application, implementation, support or management of computer-based information systems.” Information technology has transformed business and society, acted as an agent of change, and helped resolve various economic and social issues. Information and the technology for handling it have long been significant for human growth and development: people have always had to gather, process, store, and use information. As information spread across the world and advances in technology lowered the cost of information and its distribution, several trends became apparent. The Internet of Things, big data, cloud computing, and cyber security will continue to dominate the information technology scene.

The development and application of information technology change continually. Several recent trends in information technology are described below:

1. Cloud Computing:

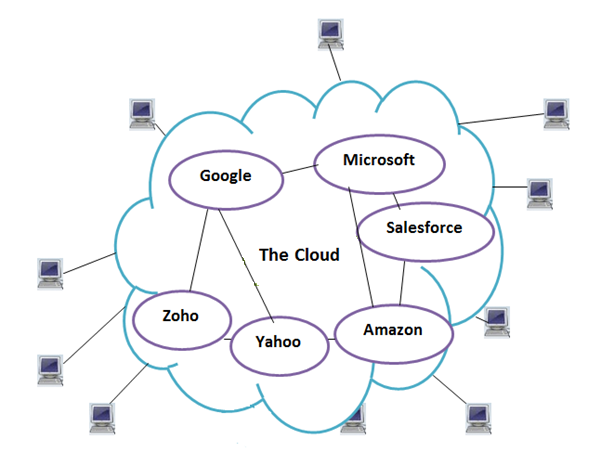

Cloud computing is one of the most dominant concepts in information technology. It is the use of computing resources, both software and hardware, delivered as a service over a network, typically the Internet. In simple terms, cloud computing is Internet computing, with the Internet conventionally drawn as a cloud. With cloud computing, users can access resources such as databases through the Internet from anywhere, for as long as they need, without worrying about maintaining or managing the underlying resources. Cloud computing is largely platform-independent. A common example is Google Apps, where applications are accessed through a browser and can be deployed to thousands of computers through the Internet.

Cloud computing is a computing model in which a large pool of systems is connected in private or public networks to offer dynamically scalable infrastructure for application, data and file storage. It helps reduce the cost of computation, application hosting, content storage and delivery. Cloud computing is a practical way to obtain direct cost benefits, and it has the potential to transform a data centre from a capital-intensive set-up into a variably priced environment. The notion of cloud computing rests on the basic principle of reusability of IT capabilities. What cloud computing adds to the traditional concepts of "grid computing", "distributed computing", "utility computing", or "autonomic computing" is that it broadens horizons across organizational boundaries. According to Forrester, cloud computing is “A pool of abstracted, highly scalable, and managed compute infrastructure capable of hosting end customer applications and billed by consumption.”

Cloud computing

Historical review of cloud computing:

The notion of an "intergalactic computer network" was introduced in the 1960s by J.C.R. Licklider, an American computer scientist whose work enabled the development of ARPANET (Advanced Research Projects Agency Network) in 1969. Cloud computing has developed through a number of phases over the years, including grid and utility computing, application service provision (ASP), and Software as a Service (SaaS). Since the sixties, cloud computing has developed along a number of lines, but because the Internet only started to offer significant bandwidth in the nineties, cloud computing for the masses has been something of a late developer.

The Principles of Cloud Computing:

Cloud computing differs from traditional web services in its principles.

These principles include:

- Resource pooling: Cloud computing providers achieve huge economies of scale through resource pooling. They assemble a massive network of servers and hard drives and apply the same configuration, protection, and so on across all of them.

- Virtualization: Users do not have to care about the physical states of their hardware nor worry about hardware compatibility.

- Elasticity: More hard disk space or server bandwidth can be added on demand with just a few clicks of the mouse. Geographical scalability is also available: users can choose to replicate data to several data centres around the world.

- Automatic/easy resource deployment: The user only needs to choose the types and specifications of the resources required, and the cloud computing provider configures and sets them up automatically.

- Metered billing: Users are charged for only what they use.
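As an illustration of metered billing, the following minimal Python sketch charges only for the resources actually consumed in a billing period. The resource names, the unit prices and the `metered_bill` helper are all hypothetical, chosen only to make the idea concrete; real providers publish their own rates and billing rules.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:
    resource: str    # hypothetical resource name, e.g. "compute_hours"
    quantity: float  # amount consumed during the billing period


# Hypothetical unit prices; these are assumptions, not real provider rates.
UNIT_PRICES = {"compute_hours": 0.05, "storage_gb": 0.02}


def metered_bill(records):
    """Charge only for what was used: sum of quantity * unit price."""
    return sum(r.quantity * UNIT_PRICES[r.resource] for r in records)


usage = [UsageRecord("compute_hours", 100), UsageRecord("storage_gb", 50)]
total = metered_bill(usage)
print(f"Amount due: {total:.2f}")
```

A period with no recorded usage produces a bill of zero, which is the essential difference from a fixed subscription.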

General features of cloud computing:

Cloud computing has the following characteristics:

- High scalability: Cloud environments can serve the business requirements of larger audiences through high scalability.

- Agility: The cloud works in a distributed mode. It shares resources among users and tasks while improving efficiency and agility (responsiveness).

- High availability and reliability: Servers are highly available and more reliable because the chances of infrastructure failure are minimal.

- Multi-sharing: With the cloud working in a distributed and shared mode, multiple users and applications can work more efficiently with cost reductions by sharing common infrastructure.

- Services in pay-per-use mode: Service-level agreements (SLAs) between the provider and the user must be defined when services are offered in pay-per-use mode. These may be based on the complexity of the services offered. Application programming interfaces (APIs) may be offered so that users can access services on the cloud.

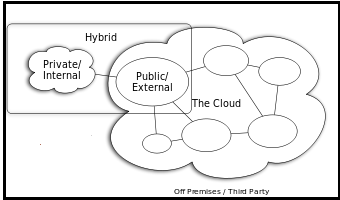

Types of cloud computing environments:

Cloud computing environments come in several types.

Public clouds: This environment can be used by the general public, which includes individuals, corporations and other types of organizations. Typically, public clouds are operated by third parties or vendors over the Internet, and services are offered on a pay-per-use basis.

Private clouds: A private cloud resides within an organization and is used exclusively for that organization’s benefit.

External clouds: This cloud computing environment is external to the boundaries of the organization. Some external clouds make their cloud infrastructure available to specific other organizations, but not to the general public.

Hybrid clouds: This is a blend of both private (internal) and public (external) cloud computing environments. In a hybrid cloud, users can leverage third party cloud providers in either a full or partial manner, increasing the flexibility of computing.

Types of cloud computing

Models of cloud computing:

Cloud computing offers three broad types of service: Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS).

- Software as a Service (SaaS): In this model, a complete application is offered to the customer as a service on demand. A single instance of the service runs on the cloud and serves multiple end users. On the customer’s side, there is no need for upfront investment in servers or software licenses, while for the provider, costs are lowered because only a single application needs to be hosted and maintained. Many large companies currently provide SaaS, including Google, Salesforce, Microsoft and Zoho.

- Platform as a Service (PaaS): In this model, a layer of software or a development environment is encapsulated and offered as a service, upon which other higher levels of service can be built. Customers are free to build their own applications, which run on the provider’s infrastructure. To meet the manageability and scalability requirements of the applications, PaaS providers offer a predefined combination of OS and application servers, such as the LAMP platform (Linux, Apache, MySQL and PHP), restricted J2EE, Ruby, etc. Examples include Google’s App Engine and Force.com.

- Infrastructure as a Service (IaaS): This model offers basic storage and computing capabilities as standardized services over the network. Servers, storage systems, networking equipment and data centre space are pooled and made available to handle workloads. The customer typically deploys their own software on the infrastructure. Popular examples are Amazon, GoGrid and 3Tera.
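The difference between the three models can be sketched as a split of responsibilities along a technology stack. The layer names and the exact cut-off points below are assumptions based on a commonly used simplification, not something any particular provider guarantees; `responsibilities` is a hypothetical helper.

```python
# Layers of a typical stack, from lowest to highest (an assumed simplification).
STACK = [
    "networking", "storage", "servers", "virtualization",
    "operating system", "runtime", "data", "application",
]

# Index of the first layer the customer manages under each model (assumed split).
CUSTOMER_STARTS_AT = {"IaaS": 4, "PaaS": 6, "SaaS": len(STACK)}


def responsibilities(model):
    """Return which layers the provider and the customer each manage."""
    cut = CUSTOMER_STARTS_AT[model]
    return {"provider": STACK[:cut], "customer": STACK[cut:]}


for model in ("IaaS", "PaaS", "SaaS"):
    split = responsibilities(model)
    print(model, "customer manages:", split["customer"] or "nothing")
```

Under this sketch the customer's share shrinks from IaaS to PaaS to SaaS, which matches the descriptions above: own software on rented infrastructure, own applications on a provided platform, and a complete hosted application.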

Cloud computing has many advantages:

- Cloud computing reduces a company’s IT infrastructure costs.

- Cloud computing supports virtualization, which enables servers and storage devices to be used across the organization.

- Cloud computing makes maintenance of software and hardware easier as installation is not required on each end user’s computer.

Issues that arise when using cloud computing include privacy, compliance, security, legal matters, abuse, and IT governance. Although information and data on the cloud can in principle be accessed anytime and from anywhere, there are times when the system can suffer serious outages.

The other major issue is security. Before adopting this technology, users should understand that they will be entrusting all of the company’s sensitive information to a third-party cloud service provider.

This technology is prone to attack. Storing information in the cloud could make a company susceptible to external attacks and threats. It is well recognized that nothing on the Internet is completely secure, so there is always a lurking possibility of theft of sensitive data.

Cloud computing offers access to shared resources and common infrastructure, providing services on demand over the network to perform operations that meet changing business requirements. In this Internet-based computing, hardware and software resources are provided to users on demand. It is a by-product and consequence of the ease of access to remote computing sites that the Internet provides. The location of the physical resources and devices being accessed is normally not known to the end user. It also lets operators develop, deploy and manage their applications ‘on the cloud’, which includes virtualization of resources that maintain and manage themselves.

2. Mobile Application:

Another growing trend in information technology is mobile applications, that is, software applications for smartphones and tablets. Mobile applications, or mobile apps, have been hugely successful since their inception. They are a fast-growing segment of the global mobile market and consist of software that runs on a mobile device and performs certain tasks for the user. Because they cover many functions, from user interfaces for basic telephone and messaging services to advanced services, mobile apps are used extensively by customers. They form a large and continuously growing market served by an increasing number of app developers, publishers and providers. Many research reports have indicated that the global market for mobile applications will grow sharply over the next two years.

Mobile applications are designed to run on smartphones, tablets and other mobile devices. They are available for download on various mobile platforms, such as those of Apple, BlackBerry, and Nokia. Some mobile apps are free, whereas others carry a download cost. The revenue collected is shared between the app distributor and the app developer. From a technical perspective, mobile apps can be classified by the runtime environment in which they execute: native platforms and operating systems, such as Symbian, Windows Mobile and Linux; mobile web/browser runtimes, such as WebKit, Mozilla/Firefox, Opera Mini and RIM; and other managed platforms and virtual machines, such as Java/J2ME, BREW, Flash Lite and Silverlight.

The iPhone is a revolutionary technology among mobile phones worldwide. It has established a platform for developing all types of mobile apps, helping to paint a better picture of mobile computing. Its benefits are numerous: a multi-touch interface, accelerometer, GPS, proximity sensor, dialer, SQLite 3 database, OpenGL ES, Quartz, encryption, audio, game and animation support, address book and calendar, along with the latest iPhone gaming features. Windows Mobile applications are another recent technology. They let the user browse the Internet, send and receive mail, check schedules and contacts, and prepare presentations; in short, manage a whole business from a mobile device. Android has also set a new trend in mobile applications with its own technical interface. It helps publishers deliver applications directly to the end user and makes applications easy to download. BlackBerry is another smartphone platform. BlackBerry smartphones are among the most desired integrated communication devices, and they can serve not only the needs of one person but also those of an entire enterprise, whether a small business or a giant industry.

3. User Interfaces:

The user interface of an application usually encompasses the objects that a user sees and interacts with directly on screen, such as the document space, menus, dialog boxes, icons, images, and animations. A user interface is the means by which people (users) interact with the computer. It can contain both hardware and software components. However, the user interface of an application is only one facet of the overall user experience. Other aspects of the user experience that are not visible to the user, but are essential to an application and critical to its usability, include start-up time, latency, error handling, and automated tasks that complete without direct user interaction. Commonly, a user interface requires action by a user to accomplish a task, while a great user experience can sometimes be achieved with no user interface at all.

There are two main types of user interfaces:

1. Text-Based User Interface or Command-Line Interface: In a text-based user interface, the program’s input and output consist of characters. No graphics are displayed, and the user’s commands are entered through the keyboard. UNIX is an example. Programs written for DOS (Disk Operating System) relied on a text-based interface (Bright Siaw Afriyie, 2007). This method makes options easier to customize and is capable of more powerful tasks. Its major drawbacks are that it relies heavily on recall rather than recognition and that navigation is often more difficult.

2. Graphical User Interface: Graphical user interfaces depend on the mouse. These interfaces were developed in the 1970s at Xerox Corporation’s Palo Alto Research Center. Initially, they were not successful. Apple Computer, headed by Steve Jobs, understood the benefits of the GUI approach and introduced a computer called Lisa, but it flopped. Apple tried again and succeeded with the Macintosh, the first widely used computer with a graphical user interface (Bright Siaw Afriyie, 2007). A popular example is any version of the Windows operating system. The main advantages are that less expert knowledge is required and navigation is easier. Currently, graphical user interfaces are dominant, and most users avoid text-based interfaces.

Newer interfaces are typically variations or combinations of these two. Web-based interfaces are a type of GUI, and touchscreen interfaces are a type of GUI in which the touchscreen replaces the mouse.

Phases of the user interface development process:

The user interface has undergone a revolution since the development of the touch screen. Touch screen capability has transformed the way users interact with applications. A touch screen lets the user interact directly with what is displayed and removes the need for an intermediate hand-held device such as the mouse. Touch screens are used in smartphones, tablets, information kiosks and other information appliances.

4. Analytics:

The analytics field has grown exponentially in recent years. Analytics is a process that helps discover informative patterns in data. The field is an amalgamation of statistics, computer programming and operations research. Growth has been seen in data analytics, predictive analytics and social analytics.

Data analytics is a tool used to support the decision-making process. It converts raw data into meaningful information. It is applied in many organizations to support sound business decisions and in the sciences to prove or disprove existing models or theories. Data analytics focuses on inference, the process of deriving a conclusion based solely on what the researcher already knows.

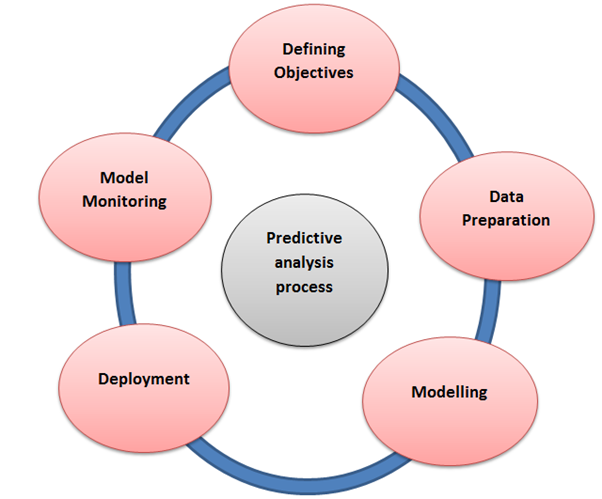

Predictive analytics: Predictive analytics is the branch of data mining concerned with forecasting probabilities. The technique uses measurable variables to predict the future behaviour of a person or other entity. Multiple predictors are combined into a predictive model. In predictive modelling, data is collected to create a statistical model, which is adjusted as additional data becomes available. Basically, it is a tool used to forecast future events based on current and historical information. A typical example of predictive analytics at work is credit scoring. Credit risk models, which use information from each loan application to predict the risk of taking a loss, have been built and refined over the years to the point where they now play indispensable roles in credit decisions. Predictive models require data that describes what is known at the time a prediction needs to be made, and the eventual outcome. Statistical techniques, such as linear regression and neural networks, are applied to identify predictors and calculate the actual models.
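To make the idea of fitting a model to historical data concrete, here is a minimal sketch of simple linear regression with a single predictor, written in plain Python. The data points are invented for illustration, and `fit_line` and `predict` are hypothetical helper names; real credit-risk models use many predictors and far more careful validation.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new predictor value."""
    slope, intercept = model
    return slope * x + intercept


# Invented historical data: predictor values and the outcomes observed later.
history_x = [1, 2, 3, 4]
history_y = [3, 5, 7, 9]

model = fit_line(history_x, history_y)
forecast = predict(model, 5)
print(f"slope={model[0]:.2f}, intercept={model[1]:.2f}, forecast={forecast:.2f}")
```

As new outcomes become available, they can be appended to the history and `fit_line` called again, which is the simplest form of adjusting the model as additional data arrives.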

predictive analytics process

Social media analytics: This is a tool companies use to understand and accommodate customer needs. Social media analytics is a powerful way to discover customer sentiment from millions of online sources. Businesses are using the power of social media to gain a better understanding of their markets. However, they need deep analytics expertise to transform this “Big Data” into actionable insight.

Applications of social media analytics:

- Find the social strengths and shortcomings of your company and competitors.

- Identify the networks of influential supporters, detractors, and major players, and determine the best ways to reach out to them.

- Perform social research on a company before entering into a venture.

- Identify combustibles, or minor issues with low volume but high intensity that could grow in impact if not addressed early.

- Monitor new developments in industry and in other related industries.
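The "combustibles" item in the list above (low volume, high intensity) can be sketched as a simple filter. This is only an illustration: the intensity scores, the thresholds and the `find_combustibles` function are all hypothetical, and a real system would derive intensity from a sentiment model rather than hand-assigned numbers.

```python
from collections import defaultdict


def find_combustibles(mentions, max_volume=5, min_intensity=0.8):
    """Flag topics mentioned rarely but with high average intensity.

    mentions: iterable of (topic, intensity) pairs, intensity in [0, 1].
    Both thresholds are assumed values chosen for illustration.
    """
    by_topic = defaultdict(list)
    for topic, intensity in mentions:
        by_topic[topic].append(intensity)

    flagged = []
    for topic, scores in by_topic.items():
        average = sum(scores) / len(scores)
        if len(scores) <= max_volume and average >= min_intensity:
            flagged.append(topic)
    return sorted(flagged)


# Invented sample data: (topic, intensity of the mention).
mentions = (
    [("billing error", 0.9), ("billing error", 0.95)]
    + [("new logo", 0.2), ("new logo", 0.3), ("new logo", 0.1)]
    + [("outage", 0.9)] * 8
)
combustibles = find_combustibles(mentions)
print(combustibles)
```

In this sample, the frequently mentioned topic is excluded because its volume is already high, and the mildly discussed topic is excluded because its intensity is low.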

Challenges of social media analytics:

- Huge amounts of data require lots of storage space and processing.

- Shifting social media platforms.

5. Software development life cycle:

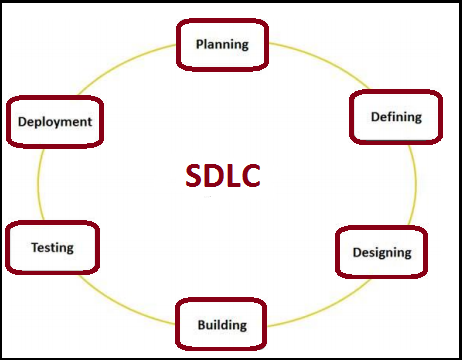

The SDLC is a process followed for a software project within a software company. It includes a detailed plan explaining how to develop, maintain, replace and alter or enhance specific software. The life cycle defines a methodology for improving the quality of software and the overall development process.

Graphical representation of the various stages of a typical SDLC

The agile development methodology is a recent model of the software development life cycle.

A classic software development life cycle comprises the following stages:

Stage 1: Planning and Requirement Analysis: Requirement analysis is a significant and fundamental stage in the software development life cycle. It is carried out by the senior members of the team with inputs from the customer, the sales department, market surveys and domain experts in the industry. This information is then used to plan the basic project approach and to conduct a product feasibility study in the economic, operational, and technical areas. Planning for the quality assurance requirements and identifying the risks associated with the project are also done in the planning stage.

Stage 2: Defining Requirements: In the second stage, it is imperative to clearly define and document the product requirements and get them approved by the customer or the market analysts. This is done through a Software Requirement Specification (SRS) document, which includes all the product requirements to be designed and developed during the project life cycle.

Stage 3: Designing the product architecture: The SRS is the reference from which product architects derive the best architecture for the product to be developed. Based on the requirements specified in the SRS, usually more than one design approach for the product architecture is proposed and documented in a DDS (Design Document Specification). This DDS is reviewed by all the important stakeholders, and the best design approach is selected based on parameters such as risk assessment, product robustness, design modularity, and budget and time constraints. A design approach clearly describes all the architectural modules of the product along with their communication and data flow with external and third-party modules. The internal design of all the modules of the proposed architecture should be defined in the DDS down to the smallest detail.

Stage 4: Building or Developing the Product: In this stage, the actual development begins and the product is built. The programming code is written according to the DDS. If the design has been done in a comprehensive and organized manner, coding can be accomplished without much trouble. Developers have to follow the coding guidelines defined by their organization, and programming tools such as compilers, interpreters and debuggers are used to produce the code. Different high-level programming languages such as C, C++, Pascal, Java, and PHP are used for coding. The programming language is chosen according to the type of software being developed.

Stage 5: Testing the Product: In modern software development life cycle models, testing activities are involved in all stages, so this stage is in effect a subset of all of them. However, this stage refers to the testing-only stage, in which product defects are reported, tracked, fixed and retested until the product reaches the quality standards defined in the SRS.

Stage 6: Deployment in the Market and Maintenance: Once the product has been tested and is ready to be deployed, it is formally released in the appropriate market. Sometimes deployment happens in stages, according to the organization’s business strategy. The product may first be released in a limited segment and tested in the real business environment.

There are several models for SDLC:

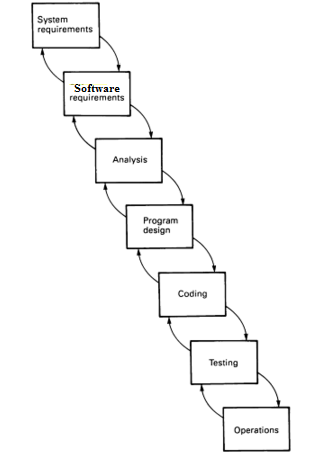

1. Waterfall: The Waterfall model is a traditional, linear, sequential software life cycle model. It was the first model to be widely used in the software industry. It is a sequential development approach in which development is seen as flowing steadily downwards through the phases of requirements analysis, design, implementation, testing (validation), integration, and maintenance.

In waterfall, a phase starts only when the previous phase is complete. Because of this, each phase of the waterfall model is precise and well defined. Since the phases fall from a higher level to a lower level, like a waterfall, it is called the waterfall model.
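The rule that a phase may start only when the previous one is complete can be sketched as a small state tracker. The phase names are taken from the description above; the `WaterfallProject` class itself is a hypothetical illustration, not part of any real project-management tool.

```python
PHASES = [
    "requirements analysis", "design", "implementation",
    "testing", "integration", "maintenance",
]


class WaterfallProject:
    """Tracks progress through the phases in strict sequential order."""

    def __init__(self):
        self.completed = []

    def current_phase(self):
        """Return the next phase to work on, or None when all are done."""
        if len(self.completed) == len(PHASES):
            return None
        return PHASES[len(self.completed)]

    def complete(self, phase):
        """Mark a phase complete; reject any phase that is out of order."""
        if phase != self.current_phase():
            raise ValueError(
                f"cannot complete {phase!r} before {self.current_phase()!r}"
            )
        self.completed.append(phase)


project = WaterfallProject()
project.complete("requirements analysis")
try:
    project.complete("testing")  # skipping design and implementation
except ValueError as error:
    print("Rejected:", error)
print("Now working on:", project.current_phase())
```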

Classical waterfall model

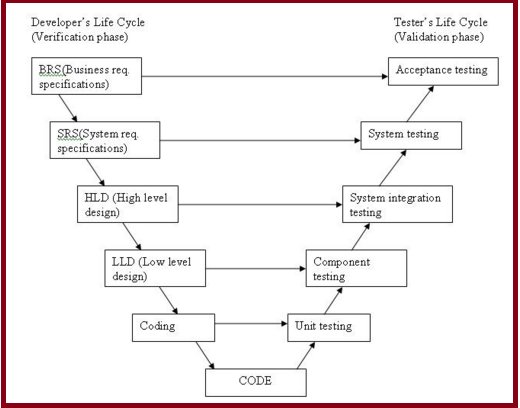

2. V shaped SDLC model: This model shows the relation of testing activities to analysis and design. It allows refining of previous development steps and fallback / correction.

This model is an extension of the waterfall model and is based on associating a testing phase with each corresponding development stage. This means that for every phase in the development cycle there is a directly associated testing phase. This is a highly disciplined model, and the next phase starts only after the previous phase is complete.

Under the V-Model, the testing phase corresponding to each development phase is planned in parallel. So there are verification phases on one side of the ‘V’ and validation phases on the other side. The coding phase joins the two sides of the V-Model. The V-Model is applied in almost the same way as the waterfall model, as both models are sequential. Requirements have to be very clear before the project starts, because it is usually expensive to go back and make changes. This model is used in the medical development field, as it is a strictly disciplined domain. The V-Model is applied in the following scenarios:

- Requirements are well defined, clearly documented and fixed.

- Product definition is stable.

- Technology is not dynamic and is well understood by the project team.

- There are no ambiguous or undefined requirements.

- The project is short.
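The pairing of verification and validation phases in the V-Model can be sketched as follows. The specific phase names and pairs are an assumption based on a commonly cited form of the V-Model, since the text above does not enumerate them; `execution_order` is a hypothetical helper.

```python
# Commonly cited verification/validation pairs (an assumption, not from the text).
V_MODEL_PAIRS = [
    ("requirements analysis", "acceptance testing"),
    ("system design", "system testing"),
    ("architecture design", "integration testing"),
    ("module design", "unit testing"),
]


def execution_order(pairs):
    """Walk down the verification side, through coding, up the validation side."""
    verification = [development for development, _ in pairs]
    validation = [testing for _, testing in reversed(pairs)]
    return verification + ["coding"] + validation


for step in execution_order(V_MODEL_PAIRS):
    print(step)
```

The first phase on the way down is paired with the last phase on the way up, which is what gives the model its V shape, with coding at the point where the two sides meet.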

V-Model of SDLC

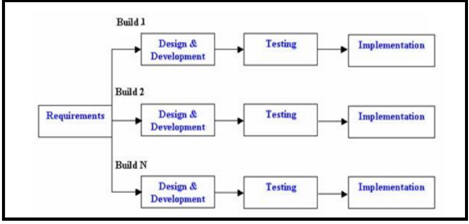

Iterative model of SDLC: In the iterative model, the process starts with a simple implementation of a small set of the software requirements and iteratively improves the evolving versions until the complete system is implemented and ready to be deployed. An iterative life cycle model does not attempt to start with a full specification of requirements. Instead, development begins by specifying and implementing just part of the software, which is then reviewed in order to identify further requirements. This process is then repeated, producing a new version of the software at the end of each iteration of the model.

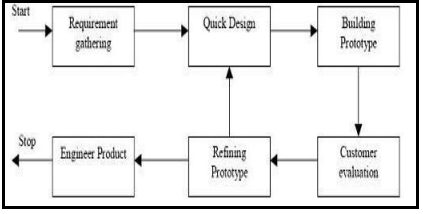

Prototype: Software prototyping is a development approach in which prototypes, that is, incomplete versions of the software program being developed, are created. When a prototype is built, the developer produces the minimum amount of code necessary to illustrate the requirements or design elements under consideration. There is no effort to comply with coding standards, provide robust error management, or integrate with other database tables or modules. Consequently, it is generally more costly to retrofit a prototype with the elements necessary to produce a production module than it is to develop the module from scratch using the final system design document. For these reasons, prototypes are not intended for business use and are generally crippled in one way or another to prevent end users from mistakenly using them as production modules.

Prototype model

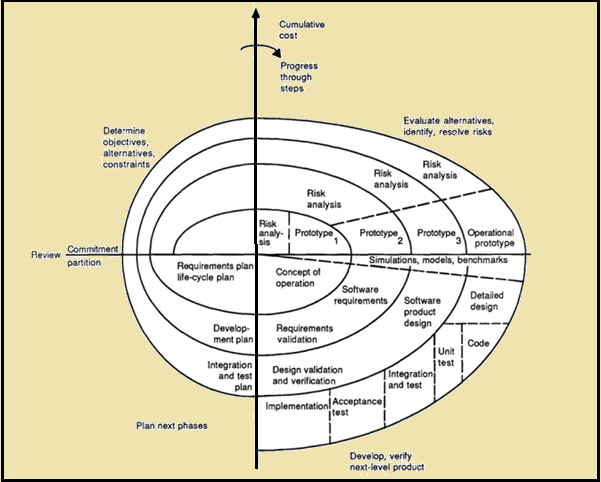

4. Spiral:

The spiral model is a software development process that combines elements of both design and prototyping in stages. It is a meta-model, a model that can be used by other models. The spiral model combines the iterative nature of the prototyping model with the linear nature of the waterfall model. This approach is ideal for developing software that is released in multiple versions.

In each iteration of the spiral approach, the software development process follows the phase-wise linear approach. At the end of the first iteration, the customer assesses the software and provides feedback. Based on that feedback, the development process enters the next iteration and again follows the linear approach to implement the changes the customer suggested. The process of iteration continues throughout the life of the software.

Benefits of Spiral model: The spiral model offers several benefits:

- A high amount of risk analysis, so risk avoidance is enhanced.

- It is good for large and mission-critical projects.

- Strong approval and documentation control.

- Extra Functionality can be added at a later date.

- Software is produced early in the software life cycle.

Drawbacks of Spiral model: Despite its numerous benefits, the spiral model falls short in several ways:

- It can be a costly model to use.

- In this model, risk analysis requires highly specific expertise.

- Project's success is highly dependent on the risk analysis phase.

- Spiral model does not work well for smaller projects.

The spiral model is suitable when cost and risk evaluation are important. It is good for medium to high-risk projects. It is also applicable where long-term project commitment is risky because of potential changes to economic priorities.

In brief, the software development life cycle is a structure imposed on the development of a software product. It is often considered a subset of the systems development life cycle.

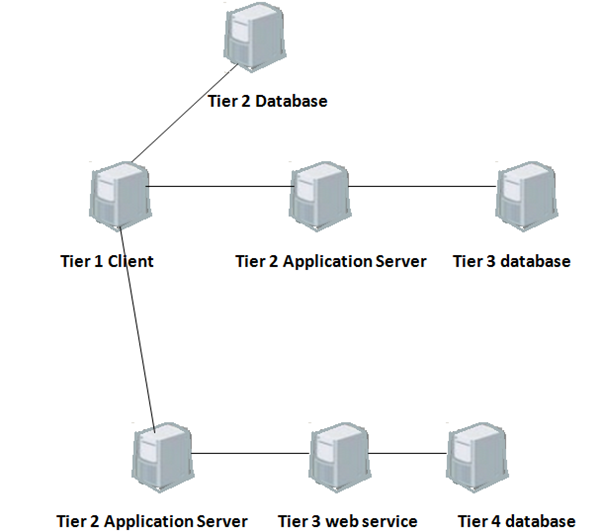

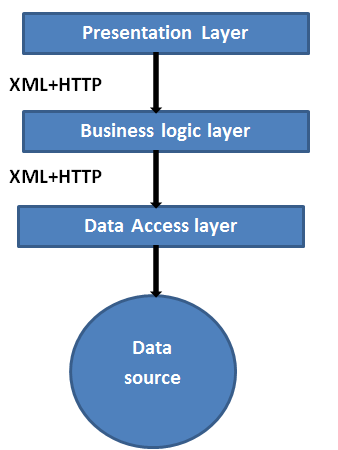

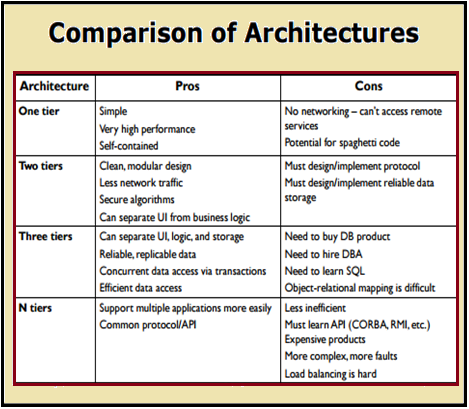

6. N tier architecture:

N-tier architecture is one of the latest trends in information technology. It is an industry-proven software architecture model that is suited to supporting enterprise-level client/server applications and addressing issues such as scalability, security and fault tolerance. .NET has many tools and features, but it does not prescribe how to implement an N-tier architecture. Therefore, to achieve a good design and implementation of N-tier architecture in .NET, it is necessary to understand its concepts. Although N-tier architecture has been used for many years, the notion remains vague.

N tier architecture

N-tier data applications are data applications that are divided into multiple tiers. Also called "distributed applications" and "multitier applications," n-tier applications separate processing into discrete tiers that are distributed between the client and the server. When developers build applications that access data, they should keep a clear separation between the various tiers that make up the application.

A typical n-tier application contains a presentation tier, a middle tier, and a data tier. The easiest way to separate the tiers in an n-tier application is to create a distinct project for each tier that developers want to include in their application. For example, the presentation tier might be a Windows Forms application, whereas the data access logic might be a class library located in the middle tier. Furthermore, the presentation tier might communicate with the data access logic in the middle tier through a service. Separating application components into separate tiers increases the maintainability and scalability of the application. It does this by making it easier to adopt new technologies that can be applied to a single tier without having to redesign the whole solution. Additionally, n-tier applications typically store sensitive information in the middle tier, which keeps it isolated from the presentation tier.
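The separation described above can be sketched in a few lines of Python. All class and method names here are hypothetical, the in-memory dictionary merely stands in for a real database, and in practice each tier would usually live in its own project or service rather than in one file.

```python
class DataTier:
    """Data tier: owns storage. A dict stands in for a real database."""

    def __init__(self):
        self._rows = {1: {"name": "Ada", "balance": 120.0}}

    def fetch_customer(self, customer_id):
        return self._rows.get(customer_id)


class MiddleTier:
    """Middle tier: data access and business logic; hides raw records."""

    def __init__(self, data_tier):
        self._data = data_tier

    def customer_summary(self, customer_id):
        row = self._data.fetch_customer(customer_id)
        if row is None:
            raise KeyError(f"unknown customer {customer_id}")
        # Only a derived flag leaves this tier, not the raw balance.
        return {"name": row["name"], "in_credit": row["balance"] >= 0}


class PresentationTier:
    """Presentation tier: formats output and talks only to the middle tier."""

    def __init__(self, middle_tier):
        self._middle = middle_tier

    def render(self, customer_id):
        summary = self._middle.customer_summary(customer_id)
        status = "in credit" if summary["in_credit"] else "overdrawn"
        return f"{summary['name']}: {status}"


ui = PresentationTier(MiddleTier(DataTier()))
print(ui.render(1))
```

Because the presentation tier depends only on `customer_summary`, the storage behind `DataTier` could be replaced without touching the user interface, which is the maintainability benefit the paragraph above describes.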

Advantages of N tier technology are as under:

- More powerful applications

- Many services to many clients

- Enhanced security, scalability and availability

Disadvantages: There are some drawbacks of this technology:

- Software is more complex (affects design, reliability, maintainability)

- More complicated to design and model

- Performance risks

- It is unclear how to achieve reliability

- Software maintenance is very different

Challenges of N Tier technology:

- Communication and distribution are usually handled by third-party middleware (CORBA, EJB, DCOM, etc.)

- Software becomes heterogeneous and parallel

- A lot of new technologies to learn

- Designing truly reusable objects is difficult

- The design must be high quality

- They may not satisfy the needs of future systems

To summarize, the field of information technology has progressed and changed greatly over the last decade. As of 2015, the IT industry is growing at a rapid rate in demand, investment and technological competence, because new technologies and applications are being implemented to strengthen everyday business processes. Information technology has had a great impact on business and will help companies serve customers better. It has become a part of society.